College Career Fairs for Engineers

We all know the career fair drill: you push your way through a mass of humanity to stand in line for a company that you hope will offer you a job. After five or ten minutes of waiting, you finally get to yell at an engineer over the din of hundreds for a few minutes, barely enough time to introduce yourself, get asked a few questions, and maybe even ask a few questions yourself. That's hardly enough time to make a lasting impression. I attended a few college career fairs earlier this year while recruiting for my company, and throughout the process I realized that a vast number of students were completely misusing their opportunities at the fairs.

Now, college career fairs present a fantastic opportunity for students. Whereas applying to a job online simply places your name into a bucket with dozens, if not hundreds or even thousands, of faceless applicants, a career fair allows students to cut through the HR and automated resume screener bullsh*t and immediately talk directly with a real-life engineer, thereby allowing the student to show off their skills and express their interests. The importance of this opportunity cannot be overstated.

When I was a student, I had a few companies start scheduling me for interviews on the spot when I impressed their engineers at the fair. Now this isn't my company's style, but for a few people, I've scribbled on the back of their resumes phrases such as "AWESOME, HIRE HIM NOW" and "AMAZING!!" I can assure you those resumes went to the top of the list.

On… Many Things

I've had quite a bit of time to dwell on things during my unscheduled sabbatical from my dearest web server, so this is going to be a doozy of a post.

On Graduating From College

I never really yearned for high school to end, but after three years of college, I began to grow restless with school. By the middle of my fourth year, every fiber of my being was screaming to be released from learning. I was tired of spending hours in the library studying things that seemed hopeless to understand, tired of 8 AM lectures on circuits, tired of long sleepless nights writing code, tired of worrying about grades, and just tired of being tired. It was a bittersweet relationship that I had with school; I loved UCLA and I knew that I would miss it after I left, but I was desperate for a break from learning.

I made my way through high school with a nagging voice at the back of my mind telling me that I needed to succeed in order to get into a good college. Upon entering college, that voice fell silent and I grew complacent. But I was soon spurred forward, partially by a true desire to learn and, to a lesser degree, by a niggling realization that a sub-par performance in school wasn't going to get me an even passable job after graduation. The fact is, I've spent most of my life working towards the moment when I would graduate from college, a goal that I always thought was far off in the distant future and whose consequences I therefore never considered. So after I moved out of my apartment in LA, a new thought wormed its way into my mind, asking, "Now what?" And it's a question that I have yet to properly resolve to my own personal satisfaction.

Others brought up in scenarios similar to mine undoubtedly have the same thought running around in their heads. From this point in life, there cease to be as many milestones set out for us, if any at all. The goal now (as I try to tell myself) is to live fully and pursue that which inflames our passions, refusing to accept the limitations ahead.

We Return for Finals

Finals week: that wonderful time of the season characterized by late nights, early mornings, hours of unbridled fun in the library, and most of all, incredible amounts of procrastination. I recently decided that every time I get too restless to study, I should get up and run a few miles. Monday turned out to be a particularly restless day, as I ended up running ten miles in the afternoon (two full perimeter runs of four miles each, and a truncated north-campus loop of two miles). The end result was that I got very little work done and utterly failed at curing my restlessness, as each successive trek through the outside world only served to fuel my desire to be away from my studies. Obviously, I have since abandoned that failure of a studying technique.

But on to other, more exciting news. My roommate started the process of moving out yesterday, and so today I learned that he owned all the knives in our apartment. Which is why I made lunch today using nothing but a pair of chopsticks and an ancient pocket knife. Presumably, for the next few days I will be eating a lot of instant noodles. Which is fine, because that was essentially my plan anyway.

I was wandering through the deluge of old data that I've accumulated on my disks and discovered a high school recording of me playing Gershwin's "I Got Rhythm" piano concerto, accompanied by the community orchestra (which was, to put it nicely, not a very good orchestra). I was initially mildly impressed by the solid skill and slight bravado with which I performed, but then immediately became extremely depressed when I realized how much my piano skills have atrophied over the last four years.

I bought a new phone recently (Nexus One) and have been discovering the joys afforded by the marvels of modern technology. Perhaps the most shocking of its features (when compared to my previous phone) is that it doesn't lock up every time the screen is touched. The Nexus One, being a rather older model superseded by various other phones including the Nexus S, was relatively cheap (only $260 unlocked and without contract) compared to the ~$600 it might cost to buy a current, unlocked, contract-free, top-of-the-line model. Nevertheless, $260 is still $20 more than what I paid for the stolid and trustworthy IBM x60s that I'm currently typing away at. There have been a lot of complaints on the tubes (e.g. from guys like ESR) about how American cellular providers are racking up exorbitant profits by locking consumers into unfair contracts that span several years. But the fact of the matter is, modern smart phones are prohibitively expensive, and the only way most Americans are even going to consider buying them is if they don't have to realize the full price of the product up front. Thus we get contracts that appear to partially subsidize the cost of the phone but in reality simply act as a payment plan. It's the American way: buy crap you don't really need with money you don't have right now.

But I digress. Last night, we realized that the window in our room opens. This idea, which has apparently been gestating in our minds for the past year, only to hatch a few days before we move out, was still quite welcome, as it served to ventilate our ever-so-stuffy room.

I'll be doing the undie-run tonight, despite the looming presence of two more finals in the very near future. But I figure it'll be worth it since I've never gone and this will be my last chance. Amusingly enough, they haven't changed the picture for the undie-run Facebook group in several years. It remains a picture someone took my first quarter here, containing a streaming mass of humanity with my then-roommate in the middle, wearing nothing but his underwear and, for some inexplicable reason, a tie.

And with that, I return to other acts of procrastination.

Classes, and Stuff

It's been a distressingly long time since I last blogged, but that's OK because I'm the only one that cares. My numerous lamentations now aired out, we move on to the good stuff (or bad stuff, depending on your point of view...)

So this last quarter, I found my digital design course consuming an inordinate amount of time, and with each passing week the amount of time I spent in lab per day increased at a logarithmic rate (logarithmic, because there are only so many hours a person can spend in lab before there simply cease to be hours in the day). The premise of the class was quite simple: spend the first few weeks going through cookie-cutter labs whilst learning the ins and outs of the tools and VHDL, and then spend the rest of the quarter building your own personal project on an FPGA. My group of three proposed to create a system where a user could drive an iRobot Create by mimicking driving motions in front of a camera, an idea perhaps less ambitious than those of other groups in the class but nonetheless quite daunting. Unlike Microsoft's super-amazing-funtastic-galactic-awesome Kinect, our system made no use of fancy infrared projectors. Instead, we simply required the user to wear blue gloves or grip a stick tipped in blue, and place their foot over a piece of green cloth. Take a look at our demo:

You'll also notice that on the display we drew solid bounding boxes over the blue and green objects in the frame.

This was done almost entirely on the FPGA and was written in VHDL, with only communication over serial port handled by the PPC405 CPU. So yeah, it took a really long time.
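For anyone curious what "draw a bounding box over the blue object" boils down to, here is a rough software sketch in C. To be clear, this is not our VHDL and not how the FPGA pipeline was structured; the pixel layout, the `is_blue` thresholds, and every name below are assumptions made up for illustration. It only shows the basic idea: scan the frame, keep the pixels that look blue enough, and track the smallest rectangle that contains them.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical 24-bit RGB pixel and bounding-box types. */
typedef struct { uint8_t r, g, b; } Pixel;
typedef struct { int found; int x0, y0, x1, y1; } Box;

/* Assumed colour test: strongly blue, weakly red and green.
 * The threshold values are illustrative, not tuned. */
static int is_blue(Pixel p)
{
    return p.b > 150 && p.r < 100 && p.g < 100;
}

/* Returns the smallest rectangle covering every "blue" pixel in a
 * row-major frame. box.found is 0 if no pixel matched. */
Box blue_bounding_box(const Pixel *frame, int width, int height)
{
    assert(frame != NULL && width > 0 && height > 0);

    Box box = { 0, width, height, -1, -1 };
    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            if (!is_blue(frame[(size_t)y * (size_t)width + (size_t)x]))
                continue;
            if (x < box.x0) box.x0 = x;
            if (y < box.y0) box.y0 = y;
            if (x > box.x1) box.x1 = x;
            if (y > box.y1) box.y1 = y;
            box.found = 1;
        }
    }
    return box;
}
```

A green box would work the same way with a different colour test. In hardware the same min/max bookkeeping can be done as pixels stream in, which is one reason this approach is a plausible fit for an FPGA.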

Me and Tex

Someone once told me that all the "cool kids" use LaTeX to write up all their technical documents. Due to my desperate desire to be part of the cool crowd, I immediately began reading through LaTeX tutorials. At the time, the only formal document I was working on was my resume, so I rewrote my resume in LaTeX, and because I wanted to have lots of fancy formatting, it took me a surprisingly long time to write it up. The end result was a resume that looked pretty decent, and one very annoyed college student who decided that LaTeX was far too aggravating to be used on a regular basis for such trivial things as lab reports or resumes. So for the longest time, my resume was the only document I used LaTeX for.

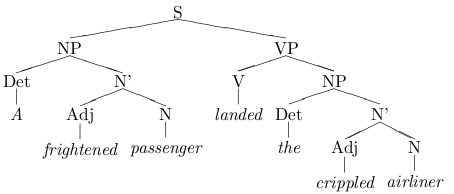

Now let's put the way-back machine in reverse and jump forward one year, to last week, when I was typing up my linguistics homework in Vim. We were working with sentence trees (think back to your automata/algorithms class, where you used a formal grammar to show that a string is an element of a language) and unfortunately, there is no good way to draw graphs, much less trees, in plain ASCII text. Lucky me, Google-fu revealed that there are numerous LaTeX packages that will allow a writer to knock together some pretty spiffy graphs. Long story short, it took me a really (really (really (really))) long time to write up my linguistics homework using TeX. I was up till 4 AM, and most of that time was spent making sentence trees. Granted, I spent a pretty good chunk of time relearning LaTeX, learning how to use some crazy packages I found out on the Internet, and going "Ooooo! Look what I can do!"

Ooooh! Pretty tree!

The end results: the loveliest homework assignment I've ever turned in, and one tired college student.

Funny story: the next day, I wrote up my algorithms homework in LaTeX. This week, I wrote part of my circuits lab report and my new linguistics homework in LaTeX.

I run Linux almost exclusively and it's often quite difficult for me to create nice-looking documents with fancy charts, tables, and nicely notated equations, because quite frankly, Open Office just doesn't cut it when I need to make stuff look nice. Not only that, but I still haven't bothered to install Open Office on my laptop. The end result is that I write most of my lab reports in Microsoft Office on the school computers. Now I'll admit, I do bash on Microsoft on occasion, but I actually enjoy using Microsoft Office 2010. Office's new equation editor is simple to use and it creates really pretty-looking equations, and the graphs now have nice, aesthetically pleasing colors and shadows (unlike the old-school graphs). So really, there hasn't yet been a reason for me to seek out an alternative to MS Office. Until now, of course.

LaTeX allows me to create epically awesome, professional-grade documents in about the same amount of time as it takes in MS Office. Some tasks are actually faster because I don't have to fight the editor program, which usually thinks it knows better than me. I can now also work on my documents from the comfort of my own desk, or on my laptop while I'm chilling in the hallway between classes. Plus, documents marked up in LaTeX just look better in general than your typical MS Word doc, due partially to more consistent formatting rules and a more structured layout.

So join the rest of us cool kids! Jump on the LaTeX bandwagon! After all, how could something built on Donald Knuth's TeX possibly be bad?

Smashing the Stack for Extra Credit

(So this one is a little old... I have a habit of writing up drafts, stashing them away to be uploaded later, and then completely forgetting about them.)

A quick intro to buffer overflow attacks for the unlearned (feel free to skip this bit).

I would highly recommend reading Aleph One's Smashing the Stack for Fun and Profit if you really want to learn about buffer-overflow attacks, but you can read my bit instead if you just want a quick idea of what it's all about.

Programs written in non-type-safe languages like C and C++ do not perform bounds checking on memory when doing reads and writes, and are therefore often susceptible to what is known as a buffer-overflow attack. Basically, when a program allocates some array on the program stack, the possibility exists that if the programmer is not careful, her program may accidentally write past the bounds of the array. One could imagine a situation where a program allocates N bytes on the stack, reads input from stdin using scanf with a bare %s (which keeps reading until it hits whitespace, no matter how small the array is), and stores the bytes it reads in the allocated array. However, the user sitting at the terminal might decide to enter more than N bytes of data, causing the program to write past the bounds of its array. Other values on the stack could then be unintentionally altered, which could cause the program to execute erratically and even crash.
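To make that scenario a bit more concrete, here is a small C sketch. The unsafe pattern appears only in a comment; the function below it is one possible bounded alternative built on the standard fgets call. The buffer size `N` and the name `read_line_bounded` are made up for this example and don't come from any real program.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define N 16  /* hypothetical buffer size, chosen only for illustration */

/* The unsafe pattern described above (do not use):
 *
 *     char buf[N];
 *     scanf("%s", buf);   // no length limit: long input runs past buf
 */

/* Reads at most cap-1 bytes of one line from `in` into buf and always
 * NUL-terminates it. A trailing newline, if read, is stripped.
 * Returns the number of bytes stored in buf. */
size_t read_line_bounded(FILE *in, char *buf, size_t cap)
{
    assert(in != NULL && buf != NULL && cap > 0);

    if (fgets(buf, (int)cap, in) == NULL) {
        buf[0] = '\0';          /* EOF or read error: return empty string */
        return 0;
    }
    size_t len = strcspn(buf, "\n");  /* index of newline, or full length */
    buf[len] = '\0';
    return len;
}
```

A caller would write something like `char buf[N]; read_line_bounded(stdin, buf, sizeof buf);`. However much the user types, at most N-1 bytes land in the array, and the rest of the line stays in the input stream for the next read.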

But a would-be attacker can do more than just crash a program with a buffer-overflow attack; she could potentially gain control of the program and even cause it to execute arbitrary code. (By "executing arbitrary code" I mean that the attacker could make the program do anything.) An attacker can take control of a program by writing past the array's bounds and changing the return address stored on the stack, so that when the currently executing function returns, control passes not to the calling function but to some completely different segment of code. At first glance, this seems rather useless. But an attacker can set the instruction pointer to anything; she could even make it point back to the start of the array that was just overwritten. A carefully crafted attack message could cause the array to be filled with some bits of arbitrary assembly code (perhaps to fork a shell and then connect that shell to a remote machine) and then overwrite the return address to point to the start of the overwritten array.

A problem with the generic buffer overflow attack is that the starting location of the stack is determined at runtime and therefore can change slightly. This makes it difficult for an attacker to know exactly where the start of the array is every single time. A solution to this is to use a "NOP slide," where the attack message doesn't immediately begin with the assembly code but rather begins with a stream of NOPs. A NOP is an assembly language instruction that basically does nothing (I believe that it was originally included in the x86 ISA to deal with hazards), so as long as the instruction pointer is reset to point somewhere into the NOP slide, the computer will "slide" (weeeeee!!!!) into the rest of the injected assembly code.

Sounds simple so far? Just you wait....

The trials and tribulations of my feeble attempt to "smash the stack."

The professor for my security class threw in a nice little extra credit problem on a homework assignment last quarter. One of the problems in the homework asked us to crash a flawed web server using a buffer overflow attack, but we could get extra credit if we managed to root the server with the buffer overflow. Buffer overflow attacks on real software are nontrivial, something my professor made sure to emphasize when he told us that in his whole experience of teaching the class, only one student had ever successfully rooted the server. Now Scott Adams, author of Dilbert, mentioned in one of his books that the best way to motivate an engineer is to tell them that a task is nearly impossible and that if they're not up for the challenge, then it's no big deal because so-and-so could probably do it better. This must be true, because after discussion section, I went straight to a computer and started reading Smashing the Stack for Fun and Profit, intent on being the second person to "smash the stack" in my school.

I was able to crash the server software less than a minute after swapping the machine's disk image in, as it was a ridiculously simple task to guess where an unbounded buffer was being used in the server code and force a segmentation fault. It took me a few more minutes to track down the exact location where the overflow was occurring (there was a spot in the code where the program would use strcat() to copy a message), but as soon as I did, I booted up GDB and started gearing up for some hard work.
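I don't have the server's source to show here, so what follows is only a generic C sketch of why an unchecked strcat() is dangerous and what a bounded append might look like. The helper name `append_bounded` and its return convention are invented for this example. The point is just that strcat() never looks at the size of the destination, so the caller has to.

```c
#include <assert.h>
#include <string.h>

/* The risky pattern (do not use with untrusted input):
 *
 *     char msg[64];
 *     strcpy(msg, "Request: ");
 *     strcat(msg, request);   // writes past msg if request is too long
 */

/* Appends src to the NUL-terminated string already in dst without ever
 * writing past dst[cap-1]. Returns 0 if all of src fit, -1 if it had to
 * be truncated (or if dst was not NUL-terminated within cap bytes). */
int append_bounded(char *dst, size_t cap, const char *src)
{
    assert(dst != NULL && src != NULL && cap > 0);

    const char *end = memchr(dst, '\0', cap);
    if (end == NULL)
        return -1;                      /* dst is not a valid string */

    size_t used = (size_t)(end - dst);
    size_t room = cap - used - 1;       /* bytes free before the NUL */
    size_t want = strlen(src);
    size_t copy = want < room ? want : room;

    memcpy(dst + used, src, copy);
    dst[used + copy] = '\0';
    return copy == want ? 0 : -1;
}
```

With a check like this, an oversized request gets truncated (and the caller finds out from the return value) instead of trampling whatever sits next to the buffer on the stack.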

Things that Happened

What I did this weekend:

- procrastinated

- cooked enough food to last me to the middle of the week

- ate all the food I cooked (I was hungry)

- tried to sleep in but failed miserably (I ended up waking up at 7:50am)

- rode my bike 25 miles, stopped and stared at the houses in Brentwood that probably cost more money than I'll ever make in ten lifetimes

- procrastinated by looking at various electronic gadgets on-line that I have no need for and couldn't possibly afford

- tried to work on my lab but was distracted by food

- sat in the computer lab for about three hours, wrote two lines of code, and tried unsuccessfully to help someone with his Linux troubles

- procrastinated by doing laundry and then sewing up the holes in my black jeans (there were a lot more holes than I realized)

- tried to study but somehow ended up watching old Justice League Unlimited episodes on YouTube

- finally got my butt in gear around 7pm on Sunday night and hit the library

On another note, I added a basic captcha to the comment box on this blog in order to reduce the amount of spam Akismet had to handle (Akismet is great, but it does occasionally mark stuff incorrectly). Amazingly enough, a few spam bots are making their way past my captchas! Modern image processing is impressive stuff...

OCaml Infix Notation and Parentheses

So when I first picked up LISP, I found myself hating everything about the language, from its distinctively un-C-like functional style to the inordinate number of parentheses required by the syntax. But before long, I found myself accidentally writing my math homework in prefix notation and putting parentheses around all of my sentences, just out of pure habit. With time, I began to find the LISP style more relaxing to develop in, and started to understand the stark beauty of the language. A few months after my first excursion into LISP, I was telling people how LISP was the most beautiful language ever created.

Now, enter OCaml, a functional language free from legacy cruft, and inspired in some form by LISP. I thought I would love being able to develop in a LISP-like language without having to worry about ending statements with a dozen closing parentheses (which I thought to be LISP's only big syntax flaw), but I found the lack of required parentheses initially quite awkward. Yes, there are a lot of insipid little parentheses in LISP, but the point of them is to clarify the code, and they do! Parentheses are what allow LISP to have such simple and easy-to-understand syntax. (Let's face it, OCaml just doesn't have the "It's full of cars!" sort of easily understood syntax.) Of course, since OCaml makes parentheses optional in most cases, one could simply add parentheses to everything in OCaml, much as one would in LISP. I got over the parentheses business in OCaml quite quickly, although I still miss them quite a bit.

The one thing I could never get over, however, was the weird way OCaml uses built-in operators (like '+', '-', '/', etc.) in infix notation, but has all other functions used in typical prefix notation. By enclosing an operator in parentheses, it can then be used in prefix notation, but this is a little clunky. I would think that it would be better to force all aspects of the language to follow the same common rules in order to reduce confusion, but the OCaml designers were apparently following a different line of reasoning from mine.

Although OCaml's odd mix of infix and prefix notation has remained a thorn in my side whenever I lay hand to keyboard to bang out some OCaml code, I've nevertheless managed to gain a good understanding of the language. I've also started to find the usefulness of the language, but my heart still pines for LISP...